AI-Powered Deepfake Tools Becoming More Accessible Than Ever

Imagine receiving a video call from your boss, only to realize later that it was a convincing deepfake orchestrated by cybercriminals. This scenario is no longer science fiction; it is a real threat in today's rapidly evolving digital landscape.

Cybersecurity is one of the most pressing challenges for businesses in the digital age.

Trend Micro's latest research reveals a significant increase in the availability of deepfake technology and in the sophistication of AI tools on the cybercrime underground. This evolution creates more opportunities for mass exploitation, even by cybercriminals with little technical skill.

Several new deepfake tools on the cybercrime underground claim to generate highly convincing fake videos and images with little effort. They include:

DeepNude Pro: A criminal service that claims it can take an image of any individual and alter it to depict that person unclothed. This could be used in sextortion campaigns.

Deepfake 3D Pro: Generates entirely synthetic 3D avatars featuring a face taken from a picture of the victim. The avatar can be programmed to follow recorded or generated speech. This could be used to fool banks' know-your-customer (KYC) checks or to impersonate celebrities in scams and vishing campaigns.

Deepfake AI: Enables criminals to stitch a victim's face onto a compromising video to ruin the victim's reputation, extort them, or spread fake news. It supports only pre-recorded video.

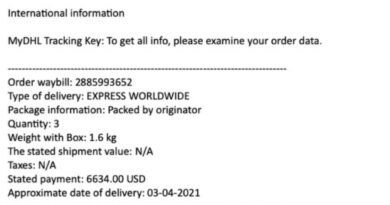

SwapFace: Enables criminals to fake real-time video streams for business email compromise (BEC) attacks and other corporate scams.

VideoCallSpoofer: Similar to SwapFace, it can generate a realistic 3D avatar from a single picture and make it follow an actor's facial movements live. This allows deepfakes to be streamed on video conferencing calls and used in scams, fake news, and other trickery.

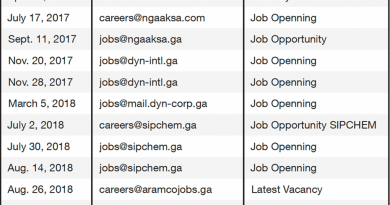

Aside from deepfakes, the report revealed the re-emergence of defunct criminal LLM services such as WormGPT and DarkBERT, now armed with new functionality. They are being advertised alongside new offerings, such as DarkGemini and TorGPT, that offer multimodal capabilities, including image generation.

However, the report noted that many of the ChatGPT-lookalike services offered on the cybercrime underground are little more than “jailbreak-as-a-service” frontends designed to trick commercial LLMs into providing unfiltered responses to malicious queries.

That said, cybercriminals have generally been relatively slow to adopt malicious generative AI tools, most likely because their current tactics, techniques, and procedures (TTPs) work well enough without introducing new technology.

Given the increasing sophistication and frequency of cyberattacks, traditional security measures are no longer enough. Businesses need to proactively test their systems and networks for weaknesses and fix them before threat actors exploit them. Individuals should be cautious of unsolicited communications and verify the authenticity of online interactions, for example by confirming an unusual request through a separate, trusted channel.

To read the full report, Surging Hype: An Update on the Rising Abuse of GenAI, please visit: https://www.trendmicro.com/vinfo/us/security/news/cybercrime-and-digital-threats/surging-hype-an-update-on-the-rising-abuse-of-genai
