Celebrating 20 Years of Trustworthy Computing

20 years ago this week, Bill Gates sent a now-famous email to all Microsoft employees announcing the creation of the Trustworthy Computing (TwC) initiative. The initiative was intended to put customer security, and ultimately customer trust, at the forefront for all Microsoft employees. Gates’ memo called upon teams to deliver products that are “as available, reliable and secure as standard services such as electricity, water services, and telephony.”

Protecting customers is core to Microsoft’s mission. With more than 8,500 Microsoft security experts from across 77 countries, dedicated red and blue teams, 24/7 security operations centers, and thousands of partners across the industry, we continue to learn and evolve to meet the changing global threat landscape.

In 2003, we consolidated our security update process into the first Patch Tuesday to provide more predictability and transparency for customers. In 2008, we published the Security Development Lifecycle to describe Microsoft’s approach to security and privacy considerations throughout all phases of the development process.

Of course, the Trustworthy Computing initiative would not be where it is today without the incredible collaboration of the industry and community. In 2005, Microsoft held its first-ever “Blue Hat” security conference, where we invited external security researchers to talk directly to the Microsoft executives and engineers behind the products they were researching.

Today, the Microsoft Security Response Center (MSRC) works with thousands of internal and external security researchers and professionals to quickly address security vulnerabilities in released products. Over the past 20 years, MSRC has triaged more than 70,000 potential security vulnerability cases shared by thousands of external security researchers and industry partners through Coordinated Vulnerability Disclosure (CVD) we’ve since issued more than 7,600 CVEs to help keep customers secure.

Beginning in 2011 with the first Bluehat Award, we have rewarded more than $40 million through the Microsoft Bug Bounty Program to recognize these vital partnerships with the global security research community in over 60 countries.

The security journey that began with TwC has involved many thousands of people across Microsoft and the industry. To celebrate 20 years of this commitment, partnership, and learning in customer security, we’re sharing the thoughts and stories of some of these employees, industry partners, experts, and contributors that helped make this journey possible.

—Aanchal Gupta, VP of Microsoft Security Response Center

The genesis of Trustworthy Computing

In 2001 a small number of us “security people” started moving away from “security products” to think more about “securing features.” Many people think of ‘security’ as security products, like antimalware and firewalls. But this is not the whole picture. We formed a team named the Secure Windows Initiative (SWI) and worked closely with individual development teams to infuse more thought about securing their features.

It worked well, but, it simply wasn’t scalable.

David LeBlanc and I talked about things we had found working with various teams. We noticed we got asked the same code-level security questions time and again. So, we decided to write a book on the topic to cover the basics so we could focus on the hard stuff.

That book was Writing Secure Code.

During 2001, a couple of worms hit Microsoft products: CodeRed and Nimda. These two worms led some customers to rethink their use of Internet Information Services. Many of the learnings from this episode went into our book and made the book better. The worms also caused the C++ compiler team to start thinking about how they could add more defenses to the compiled code automatically. Microsoft Research began work on analysis tools to find security bugs. I could feel a change in the company.

In October, I was asked by the .NET security team to look at some security bugs they had found. Because of how great these findings were, we decided to pause development, equip everybody with the latest in security training, and go looking for more security bugs. A part of my job was to train the engineering staff and to triage bugs as they came in. We fixed bugs and added extra defenses to .NET and ASP.NET. This event was known as the “.NET Security Stand Down.”

Around the end of the Stand Down, I heard that Craig Mundie (who reported to Bill) was working on ‘something’ to move the company in a more security-focused direction. At the time, that’s all I knew.

In December 2001, Writing Secure Code finally came, and I was asked to present at a two-hour meeting with Bill Gates to explain the nuances of security vulnerabilities. At the end of the meeting, I gave him a copy of Writing Secure Code. The following Monday he emailed me to say he had read the book and loved it. A few days later, Craig Mundie shared what he had been thinking about. He wanted the company to focus on Security, Privacy, Reliability, and Business Practices. These became the four pillars of Trustworthy Computing. Bill was sold on it and this all led to the now-famous BillG Trustworthy Computing memo of January 2002.

—Michael Howard, Senior Principal Cybersecurity Consultant

The evolution of the Security Development Lifecycle

The Security Development Lifecycle (SDL) is around 20 years old now and has evolved significantly since its beginning with Windows. When we started to roll out the SDL across all products back then we often received criticism from teams that it was too Windows-centric. So, the first step was to make the SDL applicable to all teams—keeping the design goal of one SDL but understanding that requirements would vary based on features and product types. We shared our experiences and made the SDL public, followed by the release of tooling we developed including the Threat Modeling Tool, Attack Surface Analyzer (ASA), and DevSkim (these last two we published on GitHub as Open Source projects).

As Microsoft started to adopt agile development methodologies and build its cloud businesses, the SDL needed to evolve to embrace this new environment and paradigm. That meant major changes to key foundations of the SDL like the bug bar, our approach to threat modeling, and how tools are integrated into engineering environments. It also presented new challenges in keeping to the one SDL principle while realizing that cloud environments are very different from the on-premises software we had traditionally shipped to customers.

We have embraced new technologies such as IoT and made further adaptions to the SDL to handle non-Windows operating systems such as Linux and macOS. A huge change was Microsoft’s adoption of Open Source which extended the need for SDL coverage to many different development environments, languages, and platforms. More recently we have incorporated new SDL content to cover the development of Artificial Intelligence and Machine Learning solutions which bring a whole new set of attack vectors.

The SDL has evolved and adapted over the last 20 years but it remains, as always, one SDL.

—Mark Cartwright, Security Group Program Manager

Securing Windows

I started my career at Microsoft as a pen tester in Windows during one of the first releases to fully implement the SDL. I cherish that experience. Every day it felt like I was on the front lines of security. We had an incredible group of people—from superstar pen testers to superstar developers all working together to implement a security process for one of the world’s largest security products. It was a vibrant time and one of the first times I saw a truly cross-disciplinary team of security engineers, developers, and product managers all working together toward a common goal. This left a long-lasting and powerful impression on me personally and on the Windows security culture.

For me, the key lesson learned from Trustworthy Computing is that good security is a byproduct of good engineering. In my naïve view before this experience, I assumed that the best way to get security in a product is to keep hiring security engineers until security improves. In reality, that approach is not possible. There will never be enough scale with security engineers and simply put good security requires engineering expertise that pen testing alone cannot achieve.

—David Weston, Partner Director of OS Security and Enterprise

An ever-changing industry

The security industry is amazing in that it never stops changing. What’s even more amazing to me is that the core philosophies of the Trustworthy Computing initiative have continued to hold true—even during 20 years of drastic change.

Compilers are a great foundational example of this.

In the early days of the Trustworthy Computing initiative, Microsoft and the broader security industry explored groundbreaking features to protect against buffer overflows, including StackGuard, ProPolice, and the /GS flag in Microsoft Visual Studio. As attacks evolved, the guiding principles of Trustworthy Computing led to Microsoft continuously evolving the foundational building blocks of secure software as well: Data Execution Protection (DEP), Address Space Layout Randomization (ASLR), Control-flow Enforcement Technology (CET) to defend against Return-Oriented Programming (ROP), and speculative execution protections, just to name a few.

Just by compiling software with a few switches, everyday developers could protect themselves against entire classes of exploits. Matt Miller gives a fascinating overview of this history in his BlueHat Israel talk.

At a higher level, one of the things that I’ve been happiest to see change is the evolution away from security absolutism.

In 2001, there was a lot of energy behind the “10 Immutable Laws of Security”, including several variants of “If an attacker can run a program on your computer, it’s not your computer anymore”.

The real world, it turns out, is shades of grey. The landscape has evolved, and it’s not game over until defenders say it is.

We have a rich industry that continually innovates around logging, auditing, forensics, incident response, and have evolved our strategies to include Assume Breach, Defense in Depth, “Impose Cost”, and more. For example: as dynamic runtimes have come of age (PowerShell, Python, C#), those that have evolved during the Trustworthy Computing era have become truly excellent examples of software that actively tilts the field in favor of defenders.

While you may not be able to prevent all attacks, you can certainly make attackers regret using certain tools and regret landing on your systems. For a great overview of PowerShell’s journey, check out Defending Against PowerShell Attacks—PowerShell Team.

When we launched the Trustworthy Computing effort, we never could have imagined the complexity of attacks the industry would be fending off in 2022—nor the incredible capability of Blue Teams defending against them. But by constantly refining and improving security as threats evolve, the world is far more secure today than it was 20 years ago.

—Lee Holmes, Principal Security Architect, Azure Security

The cloud is born

The TWC initiative and the SDL that it created recognized that security is a fundamental pillar of earning and keeping customer trust—so must be infused into all of Microsoft’s product development.

Since it was created, however, software has evolved from physical packages that Microsoft offers for customers to install, configure, and secure—to now include cloud services that Microsoft fully deploys and operates on behalf of customers. Microsoft’s responsibility to customers now includes not just developing secure software—but also operating it in a secure manner.

It also extends to ensuring that services and operational practices meet customer privacy promises and government privacy regulations.

Microsoft Azure leveraged the SDL framework and Trustworthy Computing principles from the very beginning to incorporate these additional aspects of software security and privacy. Having this foundation in place meant that instead of starting from scratch, we could enhance and extend the tools and processes that were already there for box-product software. Tools and processes like Threat Modeling and static and dynamic analysis were incredibly useful all the way to cloud scenarios like hostile multi-tenancy and DevOps.

As we created, validated, and refined, we and other Microsoft cloud service teams contributed back to the SDL and tooling—including publishing many of these for use by our customers. It’s not an understatement to say that Microsoft Azure’s security and privacy traces its roots directly back to the TWC initiative launch 20 years ago.

The cloud is constantly changing with the addition of new application architectures, programming models, security controls, and technologies like confidential computing. Static analysis tools like CodeQL provide better detections and CI/CD pipeline checks like CredScan help prevent entirely new forms of vulnerabilities that are specific to services.

At the same time, the threat landscape continues to get more sophisticated. Software that does not necessarily follow SDL processes is now a critical part of every company’s supply chain.

Just as the SDL today is much more sophisticated and encompasses far more aspects of the software lifecycle than it did 20 years ago, Microsoft will continue to invest in the SDL to address tomorrow’s software lifecycle and threats.

—Mark Russinovich, Chief Technology Officer and Technical Fellow, Microsoft Azure

An amazing community of researchers

The introduction of the Trustworthy Computing initiative coincided with my first serious forays into Windows security research. For that reason, it has defined how I view the problems and challenges of information security, not just on Windows but across the industry. Many things that I take for granted, such as security-focused development practices or automatic updates were given new impetus from the expectations laid down 20 years ago.

The fact that I’m still a Windows security researcher after all this time might give you the impression that the TwC initiative failed, but I think that’s an unfair characterization. The challenges of information security have not been static because the computing industry has not been static. Few would have envisaged quite how pervasive computing would be in our lives, and every connected endpoint can represent an additional security risk.

For every security improvement a product makes, there’s usually a corresponding increase in system complexity which adds an additional attack surface. Finding exploitable bugs is IMO definitely harder than it was 20 years ago, and yet there are more places to look. No initiative is likely to be able to remove all security bugs from a product, at least not in anything of sufficient complexity.

I feel the lasting legacy of the TwC initiative is not that it brought in a utopia of utmost security, regular news reports make it clear we’re not there yet. Instead, it brought security to the forefront, enabling it to become a first-class citizen in the defining industry of the 21st century.

—James Forshaw, First Bluehat Mitigation Bounty Winner

What I learned about Threat Intelligence from Trustworthy Computing

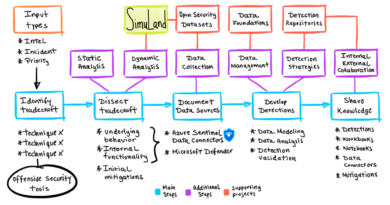

I spent 10 years at Microsoft in Trustworthy Computing (TwC). I remember being at the meeting with Bill Gates where we talked about the need for a memo on security. From the Windows security stand-down, to XP SP2, to the creation of the Security Development Lifecycle and driving it across every product, to meeting security researchers all over the world and learning from their brilliance and passion, the Trustworthy Computing initiative shaped my entire career. One aspect of security that carries forward with me to this day is about the attacks that take place. Spending time finding and fixing security bugs leads to the world of zero-day exploits and the attackers behind them. Today I run the Microsoft Threat Intelligence Center (MSTIC) and our focus is uncovering attacks by actors all over the globe and what we can do to protect customers from them.

One thing I took from my time in TwC was how important community is. No one company or organization can do it alone. That is certainly true in threat intelligence. It often feels like we hear about attacks as an industry, but defend alone. Yet when defenders work together, something amazing happens. We contribute our understanding of an attack from our respective vantage points and the picture suddenly gets clearer. Researchers contribute new attacker techniques to MITRE ATT&CK building our collective understanding. They publish detections in the form of Sigma and Yara rules, making knowledge executable. Analysts can create Jupyter notebooks so their expert analysis becomes repeatable by other defenders. A community-based approach can speed all defenders.

While much of my work in TwC was focused inward on Microsoft and the engineering of our products and services, today’s attacks really put customers and fellow defenders at the center. Defense is a global mission and I am excited and hopeful about the opportunity to work on today’s most challenging problems with the world’s defenders.

—John Lambert, Distinguished Engineer, Microsoft Threat Intelligence Center

Learn more

To learn more about Microsoft Security solutions visit our website. Bookmark the Security blog to keep up with our expert coverage on security matters. Also, follow us at @MSFTSecurity for the latest news and updates on cybersecurity.

READ MORE HERE