Gaps in Azure Service Fabric’s Security Call for User Vigilance

In this blog post, we discuss different configuration scenarios that may lead to security issues with Azure Service Fabric, a distributed platform for deploying, managing, and scaling microservices and container applications.

Kubernetes is widely known for deploying and orchestrating containerized applications, but it is not the only platform on the market that offers this service. In this blog post, we focus on Service Fabric, an orchestrator developed by Microsoft and available as a service in the Azure cloud. As with our previous posts on Kubernetes, we look into different configuration scenarios that may lead to security issues with this service.

Azure Service Fabric is a distributed platform for deploying, managing, and scaling microservices and container applications. It is available for Windows and Linux platforms, providing multiple options for application deployment. Azure offers two types of Service Fabric services: managed and unmanaged. With the managed service, the cloud service provider is responsible for configuring and maintaining the nodes. With a traditional (unmanaged) cluster, users must maintain the nodes themselves and are responsible for the cluster’s proper configuration and deployment settings.

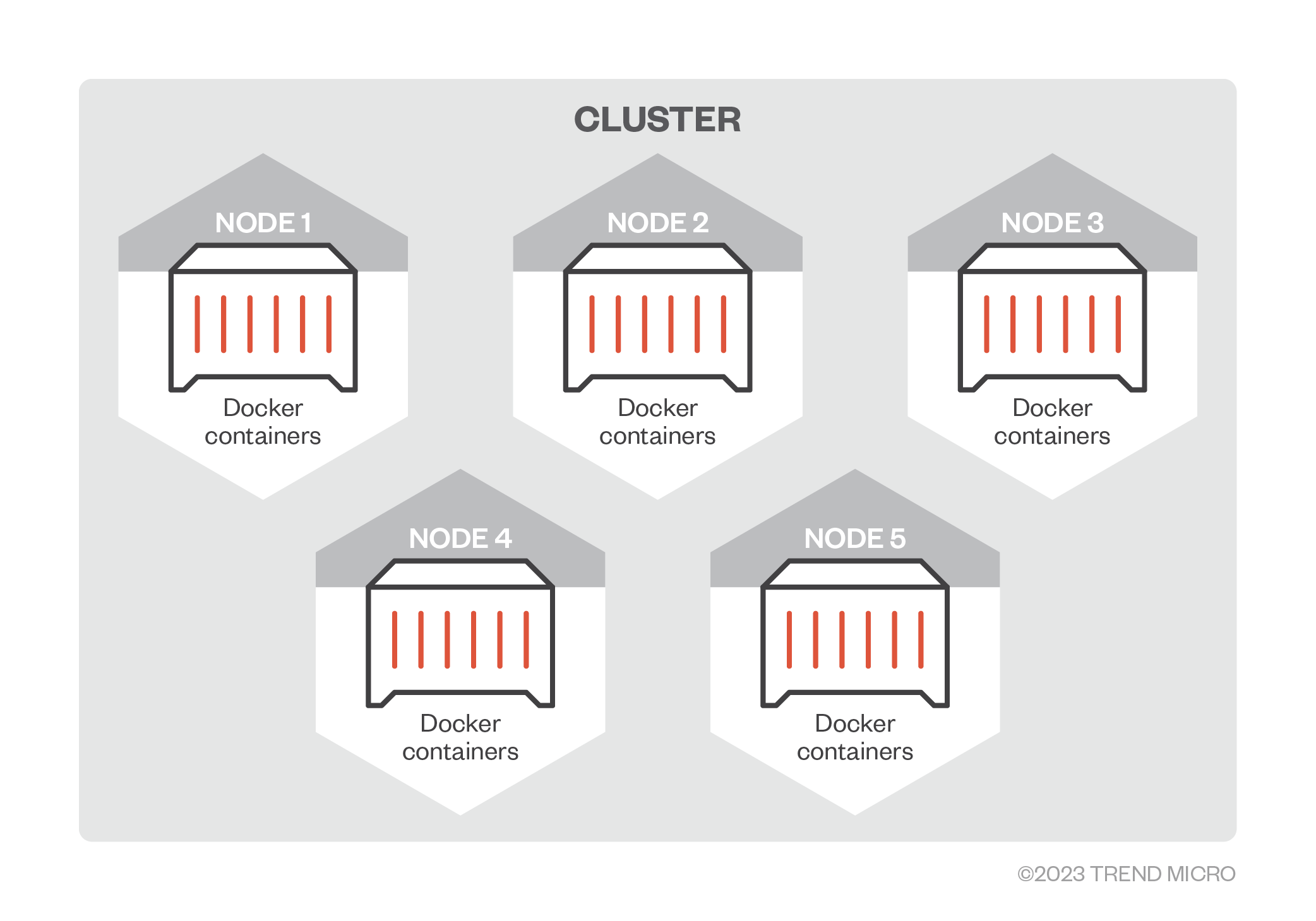

Service Fabric uses virtual machines (VMs) as cluster nodes that are running Docker as a container engine, together with Service Fabric-related services (Figure 1). The deployed applications are executed inside a container. In this entry, we will focus on the implementation of Service Fabric on the Linux operating system, Ubuntu 18.04.

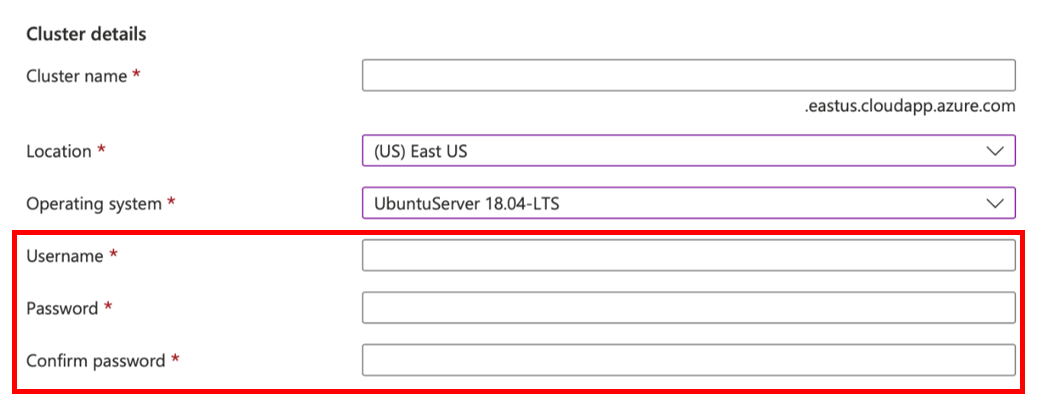

Creating a Service Fabric cluster (Figure 2) requires a username and password, among other fields. These credentials are used to access a node.

Deploying applications

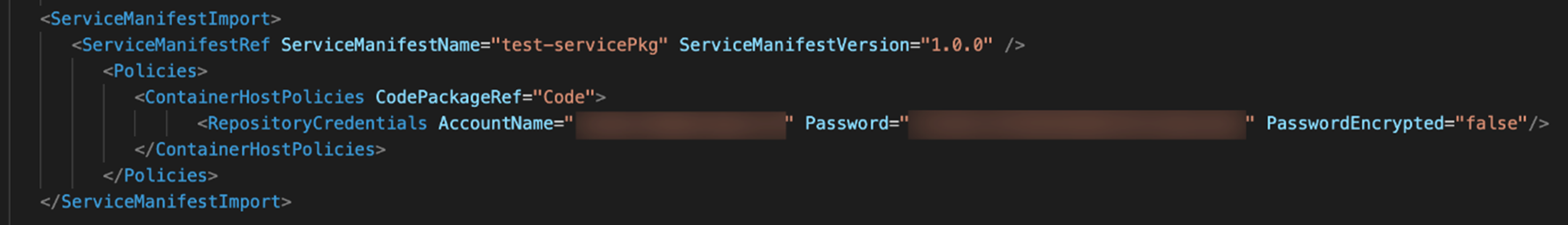

A script generated using Service Fabric’s official command-line interface (CLI) is used for application deployment. The configuration itself is saved in the ServiceManifest and ApplicationManifest XML files. It can include repository credentials for pulling container images, port exposure settings, and isolation modes (Figure 3).
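To make these manifest settings concrete, here is a minimal Python sketch that reads an ApplicationManifest and summarizes the container-related settings named above. The sample manifest, its names (`DemoAppType`, `DemoServicePkg`, `demo-registry-user`), and the helper `summarize_container_policies` are hypothetical and for illustration only. The element and attribute names reflect our understanding of the Service Fabric manifest schema and should be checked against the official schema documentation.

```python
import xml.etree.ElementTree as ET

# XML namespace used by Service Fabric manifests.
FABRIC_NS = {"sf": "http://schemas.microsoft.com/2011/01/fabric"}

# Hypothetical manifest, not taken from the cluster described in this post.
SAMPLE_MANIFEST = """<ApplicationManifest xmlns="http://schemas.microsoft.com/2011/01/fabric"
                     ApplicationTypeName="DemoAppType" ApplicationTypeVersion="1.0.0">
  <ServiceManifestImport>
    <ServiceManifestRef ServiceManifestName="DemoServicePkg" ServiceManifestVersion="1.0.0" />
    <Policies>
      <ContainerHostPolicies CodePackageRef="Code" Isolation="process">
        <RepositoryCredentials AccountName="demo-registry-user" Password="example-only" PasswordEncrypted="false" />
        <PortBinding ContainerPort="8080" EndpointRef="DemoEndpoint" />
      </ContainerHostPolicies>
    </Policies>
  </ServiceManifestImport>
</ApplicationManifest>
"""


def summarize_container_policies(manifest_xml):
    """Return one summary dict per ContainerHostPolicies element."""
    root = ET.fromstring(manifest_xml)
    summaries = []
    for policy in root.iterfind(".//sf:ContainerHostPolicies", FABRIC_NS):
        creds = policy.find("sf:RepositoryCredentials", FABRIC_NS)
        encrypted = None
        if creds is not None:
            encrypted = creds.get("PasswordEncrypted", "false").lower() == "true"
        summaries.append({
            "code_package": policy.get("CodePackageRef"),
            # Assumption: process isolation applies when the attribute is absent.
            "isolation": policy.get("Isolation", "process"),
            "registry_account": creds.get("AccountName") if creds is not None else None,
            "password_encrypted": encrypted,
            "container_ports": [
                binding.get("ContainerPort")
                for binding in policy.findall("sf:PortBinding", FABRIC_NS)
            ],
        })
    return summaries


if __name__ == "__main__":
    for summary in summarize_container_policies(SAMPLE_MANIFEST):
        print(summary)
```

A summary like this makes it easier to spot manifests that carry unencrypted registry passwords or rely on default isolation before they are deployed.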

Client certificate

To establish communication with the cluster, a user must authenticate using a client certificate that is generated for the cluster. This certificate is used for accessing the dashboard and for deploying applications through the CLI. It is essential to keep this certificate confidential, as its exposure would compromise the full cluster.

To model threat scenarios, we simulated a user code vulnerability that compromises the container and spawns a reverse shell. This can be considered a simulation of lateral movement that potential attackers could perform. Following a security mindset with Zero Trust policies and an Assume Breach paradigm, we want to highlight the following paragraph from Azure’s documentation:

“A Service Fabric cluster is single tenant by design and hosted applications are considered trusted. Applications are, therefore, granted access to the Service Fabric runtime, which manifests in different forms, some of which are: environment variables pointing to file paths on the host corresponding to application and Fabric files, host paths mounted with write access onto container workloads, an inter-process communication endpoint which accepts application-specific requests, and the client certificate which Fabric expects the application to use to authenticate itself.”

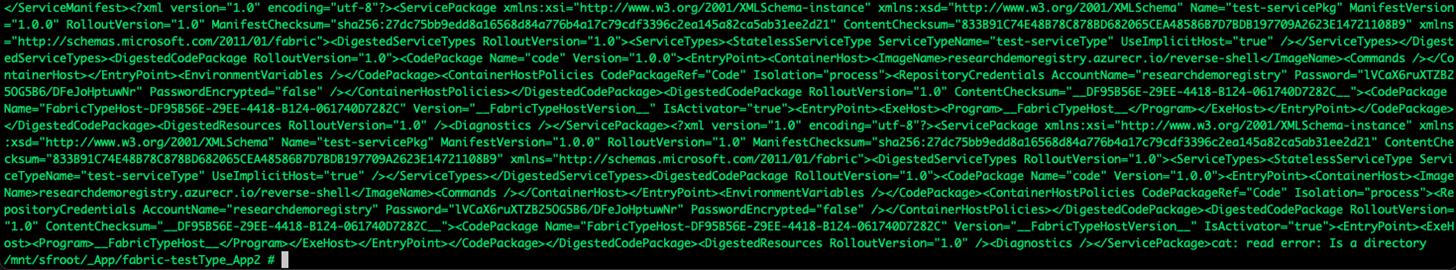

This, of course, contradicts the Assume Breach paradigm. Our analysis of the container environment confirmed the presence of sensitive information: we found several read-only mounts containing readable information about the cluster, one of which included the credentials used to log in to the container registry (Figure 4).

Notably, we deployed the container with the default process isolation and applied no mitigation policies, as we were relying on minimal default settings. The leaked credentials would give us access to the linked private container registry, enabling us to pull all of the container images present, or to update packages and compromise services. The actual impact of this scenario depends on the user roles and permission settings within the linked container registry.

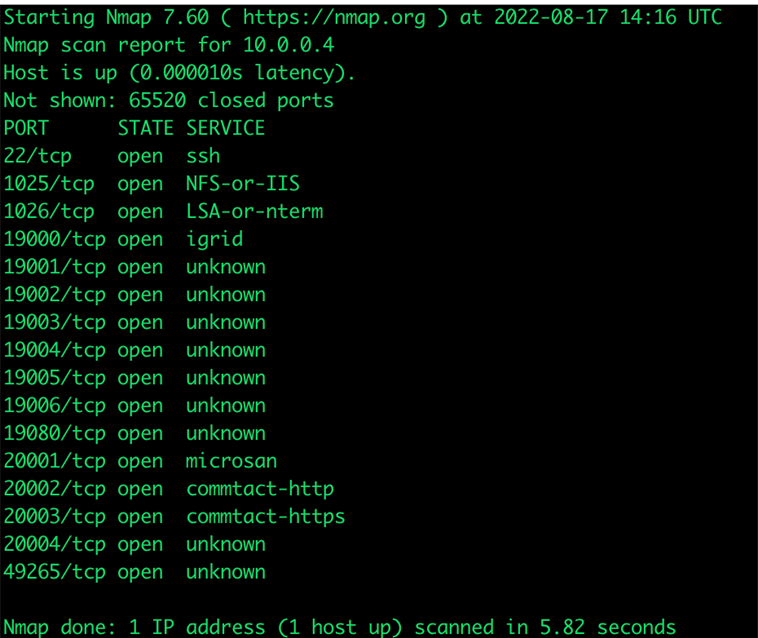

After these initial findings, we switched our focus to the available network, as our container had internet access by default. Further exploration revealed that we had network access to the node, allowing us to perform a port scan on it (Figure 5).
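As a rough illustration of this kind of reachability check, the following Python sketch tests whether given TCP ports on a host accept connections. It is not the tool we used in our analysis. The helper name `open_tcp_ports` and the timeout value are our own assumptions, and it should only be run against systems you are authorized to test.

```python
import socket


def open_tcp_ports(host, ports, timeout=0.5):
    """Return the subset of `ports` that accept a TCP connection on `host`."""
    reachable = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success and an error code otherwise.
            if sock.connect_ex((host, port)) == 0:
                reachable.append(port)
    return reachable


if __name__ == "__main__":
    # Loopback is used here only so the example is safe to run anywhere.
    print(open_tcp_ports("127.0.0.1", [22, 80, 443]))
```

Running a check like this from inside a container against the node address is a quick way to confirm whether node services are exposed to workloads.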

We could see that Secure Shell (SSH) port 22 was open on the node hosting our compromised container. SSH was configured to accept both public key and password authentication. The username and password were the same credentials we had set during cluster creation, allowing us to log in to the cluster node with root permissions.

In a real-world scenario, an attacker would likely not know our password. However, because password authentication is enabled by default, they could still run brute-force and dictionary attacks to try to guess it. We would expect the node to allow key pair authentication only. As the user is responsible for managing the cluster, we recommend configuring this manually on the node.
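One way to verify this setting on a node is to inspect its `sshd_config`. The Python sketch below is a simplified check under stated assumptions: it only considers the global section (it stops at the first `Match` block and ignores `Include` directives), it relies on OpenSSH using the first value found for a keyword, and it treats an unset `PasswordAuthentication` as enabled, which is the OpenSSH default. The helper name `password_auth_enabled` is hypothetical.

```python
def password_auth_enabled(sshd_config_text):
    """Return True if the global sshd_config section allows password logins."""
    for raw_line in sshd_config_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # Keywords are case-insensitive; "Keyword value" and "Keyword=value" both occur.
        parts = line.replace("=", " ", 1).split()
        keyword = parts[0].lower()
        if keyword == "match":
            break  # conditional blocks are out of scope for this sketch
        if keyword == "passwordauthentication" and len(parts) > 1:
            # The first occurrence of a keyword is the one sshd uses.
            return parts[1].lower() == "yes"
    return True  # OpenSSH enables password authentication when unset


if __name__ == "__main__":
    hardened = "PubkeyAuthentication yes\nPasswordAuthentication no\n"
    print(password_auth_enabled(hardened))
```

For an authoritative answer on a live node, the effective configuration reported by the SSH daemon itself is preferable to parsing the file.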

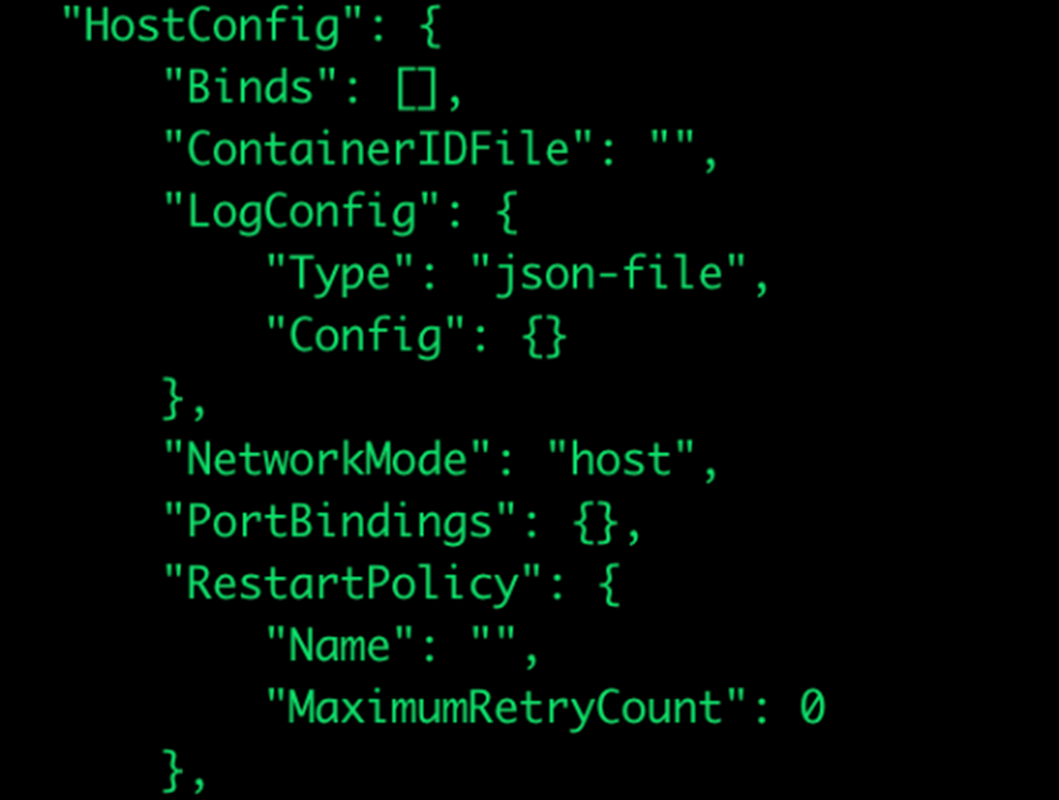

We were thus able to access the node from the container. After further investigation, we found that Docker is used as the container engine and that the default network mode is set to host (Figure 6).

According to official Docker documentation: “If you use the host network mode for a container, that container’s network stack is not isolated from the Docker host (the container shares the host’s networking namespace), and the container does not get its own IP-address allocated.”
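To check which containers on a node share the host network stack, the JSON produced by `docker inspect` can be examined for the `HostConfig.NetworkMode` field. The sketch below assumes that output has been captured as text. The sample data and the helper name `containers_on_host_network` are hypothetical.

```python
import json


def containers_on_host_network(inspect_json):
    """Return names of containers whose HostConfig.NetworkMode is 'host'."""
    names = []
    # `docker inspect` prints a JSON array with one object per container.
    for container in json.loads(inspect_json):
        mode = container.get("HostConfig", {}).get("NetworkMode")
        if mode == "host":
            names.append(container.get("Name", "<unnamed>"))
    return names


# Hypothetical, heavily trimmed inspect output for two containers.
SAMPLE_INSPECT = json.dumps([
    {"Name": "/demo-app", "HostConfig": {"NetworkMode": "host"}},
    {"Name": "/demo-db", "HostConfig": {"NetworkMode": "bridge"}},
])

if __name__ == "__main__":
    print(containers_on_host_network(SAMPLE_INSPECT))
```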

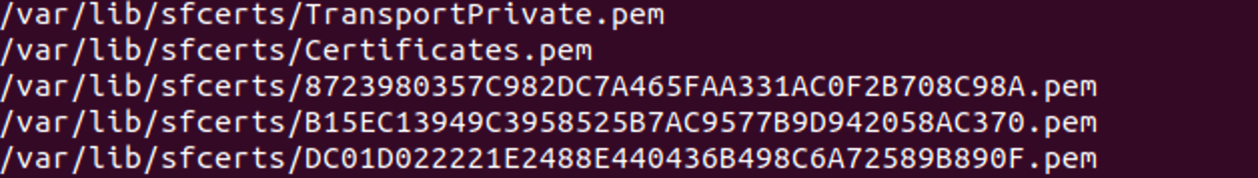

This has security implications: sharing the IP address with the host, together with non-restrictive firewall settings, makes the node (10.0.0.4) reachable from the container by default. The node itself contains sensitive cluster information, such as the cluster certificate, which allows us to gain control over the whole cluster (Figure 7).

Mitigation and security hardening

Mitigating attacks and limiting the attack vectors of bad actors are the foundations of securing IT systems, so encrypting communication and forcing authorization for accessing sensitive content are a must. However, as our research has shown, applications can be poorly configured and default deployments may not be inherently secure. Knowing this, users should be especially careful when securing application deployments.

Fortunately, Service Fabric allows us to address some of these issues using appropriate policies, which are defined within the ApplicationManifest file. For instance, setting ServiceFabricRuntimeAccessPolicy with the attribute RemoveServiceFabricRuntimeAccess to ‘true’ removes the /mnt/sfroot/ mount from the deployed application container; this prevents sensitive information that’s stored there from leaking in the event of compromise.
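As a hedged sketch of how this policy could be audited, the Python snippet below scans an ApplicationManifest and lists the service imports that do not set `RemoveServiceFabricRuntimeAccess` to true. The sample manifest, the package names, and the helper `imports_with_runtime_access` are hypothetical. Placing the policy under `ServiceManifestImport/Policies` is our reading of the Service Fabric schema and should be verified against the official documentation.

```python
import xml.etree.ElementTree as ET

# XML namespace used by Service Fabric manifests.
FABRIC_NS = {"sf": "http://schemas.microsoft.com/2011/01/fabric"}

# Hypothetical manifest with one hardened and one unhardened service import.
POLICY_SAMPLE = """<ApplicationManifest xmlns="http://schemas.microsoft.com/2011/01/fabric"
                     ApplicationTypeName="DemoAppType" ApplicationTypeVersion="1.0.0">
  <ServiceManifestImport>
    <ServiceManifestRef ServiceManifestName="HardenedServicePkg" ServiceManifestVersion="1.0.0" />
    <Policies>
      <ServiceFabricRuntimeAccessPolicy RemoveServiceFabricRuntimeAccess="true" />
    </Policies>
  </ServiceManifestImport>
  <ServiceManifestImport>
    <ServiceManifestRef ServiceManifestName="LegacyServicePkg" ServiceManifestVersion="1.0.0" />
  </ServiceManifestImport>
</ApplicationManifest>
"""


def imports_with_runtime_access(manifest_xml):
    """Return service manifest names that keep Service Fabric runtime access."""
    root = ET.fromstring(manifest_xml)
    exposed = []
    for service_import in root.iterfind("sf:ServiceManifestImport", FABRIC_NS):
        ref = service_import.find("sf:ServiceManifestRef", FABRIC_NS)
        policy = service_import.find(
            "sf:Policies/sf:ServiceFabricRuntimeAccessPolicy", FABRIC_NS
        )
        removed = (
            policy is not None
            and policy.get("RemoveServiceFabricRuntimeAccess", "false").lower() == "true"
        )
        if not removed:
            name = ref.get("ServiceManifestName") if ref is not None else "<unknown>"
            exposed.append(name)
    return exposed


if __name__ == "__main__":
    print(imports_with_runtime_access(POLICY_SAMPLE))
```

A check like this could run in a deployment pipeline so that manifests without the policy are flagged before they reach the cluster.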

On the other hand, we were unable to use networking policies to limit network access to the node from the container. It is worth mentioning that some of the settings are also not available on Linux hosts, as shown in Figure 8.

We were also unable to set Hyper-V isolation for containers running on Linux hosts. Our most significant finding, in which we demonstrated how credentials could be guessed to gain access to the node, may be mitigated by manually configuring the node to accept public key authentication only and generating appropriate key pairs.

Given these facts, we began to explore scenarios with more serious implications, such as container escape. We evaluated the possibility of:

- Exploiting unpatched container engine vulnerabilities (like CVE-2019-5736)

- Exploiting unpatched Service Fabric vulnerabilities (like CVE-2022-30137)

- Exploiting isolation vulnerabilities, like:

  - Kernel vulnerabilities, in case of process isolation

  - Hypervisor vulnerabilities, in case of virtualization

- Exploiting a misconfiguration or a design flaw

As most of these options seemed unlikely to succeed, and given our earlier access to the node, we switched our focus to analyzing the node itself. This led to our discovery of CVE-2023-21531, which allowed us to gain cluster access from a container.

Conclusion

Users should realize that their usage of cloud services doesn’t delegate security fully to their cloud service provider (CSP): Depending on the service, some configuration is necessary on the user’s end, leaving room for misconfigurations and unprotected deployments. Security comes with a price that doesn’t end with paying for CSPs; it also calls for a proactive security mindset and consistently enforced security practices.

Following best practices that are specific to Service Fabric and container registries will help mitigate any emerging security issues. However, some applications might not be designed with Zero Trust policies in mind, so additional manual configuration and security hardening from the user may be required.
