Google Accidentally Published Internal Search Docs To GitHub

Google apparently accidentally posted a big stash of internal technical documents to GitHub, partially detailing how the search engine ranks webpages. For most of us, the question of search rankings is just "are my web results good or bad," but the SEO community is both thrilled to get a peek behind the curtain and up in arms, since the docs apparently contradict some of what Google has told them in the past. Most of the commentary on the leak comes from SEO experts Rand Fishkin and Mike King.

Google confirmed the authenticity of the documents to The Verge, saying, “We would caution against making inaccurate assumptions about Search based on out-of-context, outdated, or incomplete information. We’ve shared extensive information about how Search works and the types of factors that our systems weigh, while also working to protect the integrity of our results from manipulation.”

The fun thing about accidentally publishing to the GoogleAPI GitHub is that, while these are sensitive internal documents, Google technically released them under an Apache 2.0 license. That means anyone who stumbled across the documents was granted a "perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable copyright license" to them, so copies are now freely available online.

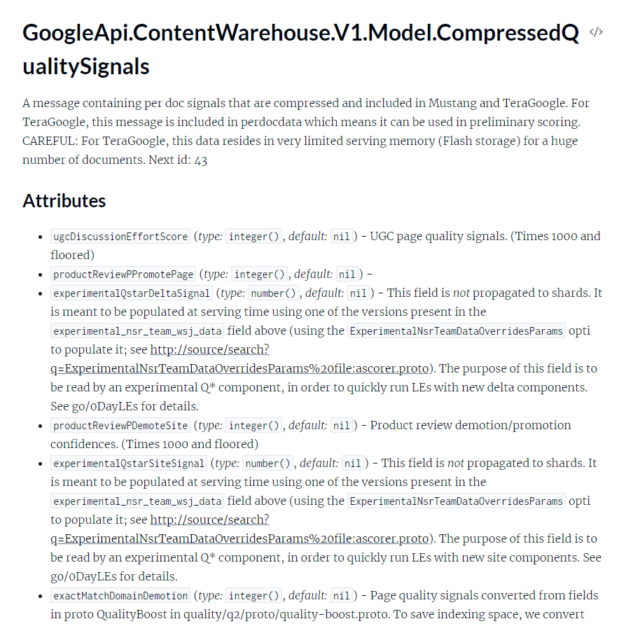

The leak contains a ton of API documentation for Google's "ContentWarehouse," which sounds a lot like the search index. As you'd expect, even this incomplete look at how Google ranks webpages is impossibly complex. King writes that there are "2,596 modules represented in the API documentation with 14,014 attributes (features)." These documents were written by programmers for programmers and rely on a lot of background information that you'd probably only know if you worked on the search team. The SEO community is still poring over them and using them to form theories about how Google Search works.

Both Fishkin and King accuse Google of "lying" to SEO experts in the past. One of the revelations in the documents is that the click-through rate of a search result listing affects its ranking, something Google has denied on several occasions was part of the results "stew." The click-tracking system is called "Navboost," in other words, boosting websites that users navigate to. Naturally, a lot of this click data comes from Chrome, even after you leave the search page. For instance, some results show a small set of "sitemap" links below the main listing, and part of what apparently powers this feature is a site's most popular subpages as determined by Chrome's click tracking.

The documents also suggest Google has whitelists that artificially boost certain websites for certain topics. The two mentioned are "isElectionAuthority" and "isCovidLocalAuthority."

A lot of the documentation describes exactly how you would expect a search engine to work. Sites have a "SiteAuthority" value that ranks well-known sites higher than lesser-known ones. Authors also have their own rankings, but as with everything here, it's impossible to know how everything interacts with everything else.

Both SEO experts' write-ups make them sound offended that Google would ever mislead them, but doesn't the company need to maintain at least a slightly adversarial relationship with the people who try to manipulate its search results? One recent study found that "search engines seem to lose the cat-and-mouse game that is SEO spam" and found "an inverse relationship between a page's optimization level and its perceived expertise, indicating that SEO may hurt at least subjective page quality." None of this newly public documentation is likely to be good for users or for Google's results quality. For instance, now that people know click-through rate affects search ranking, couldn't you boost a website's listing with a click farm?
