New machine learning model sifts through the good to unearth the bad in evasive malware

We continuously harden machine learning protections against evasion and adversarial attacks. One of the latest innovations in our protection technology is the addition of a class of hardened malware detection machine learning models called monotonic models to Microsoft Defender ATP’s Antivirus.

Historically, detection evasion has followed a common pattern: attackers build new versions of their malware and test them offline against antivirus solutions. They keep making adjustments until the malware evades antivirus products. Attackers then carry out their campaign knowing that the malware won’t initially be blocked by AV solutions, which are then forced to catch up by adding detections for the malware. In the cybercriminal underground, antivirus evasion services are available to make this process easier for attackers.

Microsoft Defender ATP’s Antivirus has become significantly more resistant to attacker tactics like this. A sizeable portion of the protection we deliver is powered by machine learning models hosted in the cloud. The cloud protection service breaks attackers’ ability to test and adapt to our defenses in an offline environment, because attackers must either forgo testing or test against our defenses in the cloud, where we can observe them and react even before they begin.

Hardening our defenses against adversarial attacks doesn’t end there. In this blog we’ll discuss a new class of cloud-based ML models that further harden our protections against detection evasion.

Most machine learning models are trained on a mix of malicious and clean features. Attackers routinely try to throw these models off balance by stuffing clean features into malware.

Monotonic models are resistant to adversarial attacks because they are trained differently: they only look for malicious features. The magic is this: attackers can’t evade a monotonic model by adding clean features. To evade a monotonic model, an attacker would have to remove malicious features.

Monotonic models explained

In 2018, researchers from UC Berkeley (Incer, Inigo, et al., “Adversarially robust malware detection using monotonic classification”, Proceedings of the Fourth ACM International Workshop on Security and Privacy Analytics, ACM, 2018) proposed adding monotonic constraints to malware detection machine learning models to make the models robust against adversaries. Simply put, the technique only allows the machine learning model to leverage malicious features when considering a file; it’s not allowed to use any clean features.

Figure 1. Features used by a baseline versus a monotonic constrained logistic regression classifier. The monotonic classifier does not use features weighted as clean, which makes it more robust to adversaries.
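To make the constraint concrete, here is a minimal sketch of one simple way to obtain a monotonic logistic regression: ordinary gradient descent, with every weight clamped to be non-negative after each step (projected gradient descent). This is an illustration under stated assumptions, not a description of how the production models are trained. The feature names, the toy data, and the `train_logreg` helper are all hypothetical.

```python
import math

# Hypothetical binary features, in column order.
FEATURES = ["packed", "injects_code", "trusted_signature", "game_strings"]

# Toy training set (made up for illustration): 1 = malware, 0 = clean.
SAMPLES = [
    [1, 1, 0, 0], [1, 0, 0, 0], [0, 1, 0, 0], [1, 1, 0, 0],
    [0, 0, 1, 1], [0, 0, 1, 0], [0, 0, 0, 1], [0, 0, 0, 0],
]
LABELS = [1, 1, 1, 1, 0, 0, 0, 0]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, weights, bias):
    """Malicious score in (0, 1) for one feature vector."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


def train_logreg(samples, labels, monotonic, epochs=500, lr=0.5):
    """Batch gradient descent for logistic regression.

    When `monotonic` is True, weights are clamped to >= 0 after every
    update, so no feature can ever lower the malicious score.
    """
    n = len(samples[0])
    weights, bias = [0.0] * n, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n, 0.0
        for x, y in zip(samples, labels):
            err = predict(x, weights, bias) - y
            grad_b += err
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
        m = len(samples)
        bias -= lr * grad_b / m
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
        if monotonic:
            weights = [max(0.0, w) for w in weights]  # projection step
    return weights, bias


base_w, base_b = train_logreg(SAMPLES, LABELS, monotonic=False)
mono_w, mono_b = train_logreg(SAMPLES, LABELS, monotonic=True)

for name, bw, mw in zip(FEATURES, base_w, mono_w):
    print(f"{name:18s} baseline={bw:+.2f} monotonic={mw:+.2f}")
```

On this toy data the baseline learns negative weights for the two clean features, while the constrained model leaves them at zero and relies only on the malicious features, mirroring the contrast the figure describes.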

Inspired by the academic research, we deployed our first monotonic logistic regression models to Microsoft Defender ATP cloud protection service in late 2018. Since then, they’ve played an important part in protecting against attacks.

Figure 2 below illustrates the production performance of the monotonic classifiers versus the baseline unconstrained model. As expected, monotonic-constrained models detect less malware overall than classic models. However, they can detect malware attacks that otherwise would have been missed because of clean features.

Figure 2. Comparison of the unconstrained baseline classifier and the monotonic constrained classifier in customer protection.

The monotonic classifiers don’t replace baseline classifiers; they run alongside the baseline and provide additional protection. We combine all our classifiers using stacked classifier ensembles. Monotonic classifiers add significant value to these ensembles because of the unique classification they provide.
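As a rough illustration of stacking, the sketch below feeds the scores of two first-stage classifiers into a second-stage logistic model. The classifier names, the meta-weights, and the bias are hypothetical values chosen for the example; in a real stacked ensemble they would be learned from data, and the actual ensemble design is not described in this post.

```python
import math

# Hypothetical second-stage parameters (a real ensemble would learn these).
META_WEIGHTS = {"baseline": 4.0, "monotonic": 4.0}
META_BIAS = -3.0


def stacked_score(base_scores):
    """Second-stage logistic model whose inputs are first-stage scores."""
    z = META_BIAS + sum(META_WEIGHTS[name] * s for name, s in base_scores.items())
    return 1.0 / (1.0 + math.exp(-z))


# Signed malware: clean features fool the baseline, but the monotonic
# classifier still sees the malicious features.
evasive = stacked_score({"baseline": 0.10, "monotonic": 0.95})

# Ordinary clean file: both first-stage classifiers score it low.
benign = stacked_score({"baseline": 0.05, "monotonic": 0.05})

print(f"evasive malware: {evasive:.2f}, clean file: {benign:.2f}")
```

In this toy setup the evasive sample ends up above a 0.5 decision threshold only because the monotonic score contributes a signal the baseline lacks, which is the sense in which it adds unique value to the ensemble.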

How Microsoft Defender ATP uses monotonic models to stop adversarial attacks

One common way for attackers to add clean features to malware is to digitally code-sign malware with trusted certificates. Malware families like ShadowHammer, Kovter, and Balamid are known to abuse certificates to evade detection. In many of these cases, the attackers impersonate legitimate registered businesses to defraud certificate authorities into issuing them trusted code-signing certificates.

LockerGoga, a strain of ransomware that’s known for being used in targeted attacks, is another example of malware that uses digital certificates. LockerGoga emerged in early 2019 and has been used by attackers in high-profile campaigns that targeted organizations in the industrial sector. Once attackers are able to breach a target network, they use LockerGoga to encrypt enterprise data en masse and demand ransom.

Figure 3. LockerGoga variant digitally code-signed with a trusted CA

When Microsoft Defender ATP encounters a new threat like LockerGoga, the client sends a featurized description of the file to the cloud protection service for real-time classification. An array of machine learning classifiers processes the features describing the content, including whether attackers had digitally code-signed the malware with a trusted code-signing certificate that chains to a trusted CA. By ignoring certificates and other clean features, monotonic models in Microsoft Defender ATP can correctly identify attacks that otherwise would have slipped through defenses.

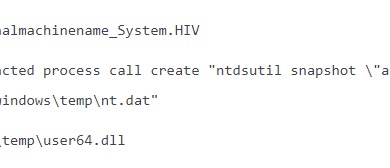

Very recently, researchers demonstrated an adversarial attack that appends a large volume of clean strings from a computer game executable to several well-known malware samples and credential dumping tools (essentially adding clean features to the malicious files) to evade detection. The researchers showed how this technique can successfully impact machine learning prediction scores so that the malware files are not classified as malware. The monotonic model hardening that we’ve deployed in Microsoft Defender ATP is key to preventing this type of attack because, for a monotonic classifier, adding features to a file can never decrease the malicious score.
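The effect of this kind of padding can be sketched with two hand-set linear scorers. Everything here is hypothetical: the feature names, the weights, and the bias are made up for illustration and do not reflect any real classifier. The only point is that a model with negative (clean) weights can be pushed below the threshold by padding, while a model with only non-negative weights cannot.

```python
import math

# Hypothetical weights. The baseline model rewards clean-looking features
# with negative weights; the monotonic model has none to exploit.
BASELINE_WEIGHTS = {
    "dumps_credentials": 3.0,
    "injects_code": 2.0,
    "game_string": -0.5,
    "trusted_signature": -2.0,
}
MONOTONIC_WEIGHTS = {"dumps_credentials": 3.0, "injects_code": 2.0}
BIAS = -2.5


def score(feature_counts, weights, bias=BIAS):
    """Logistic malicious score; features missing from `weights` count as 0."""
    z = bias + sum(weights.get(name, 0.0) * count
                   for name, count in feature_counts.items())
    return 1.0 / (1.0 + math.exp(-z))


original = {"dumps_credentials": 1, "injects_code": 1}
# The attack: append 20 clean strings, leaving the malicious content intact.
padded = dict(original, game_string=20)

for label, sample in (("original", original), ("padded", padded)):
    print(f"{label:9s} baseline={score(sample, BASELINE_WEIGHTS):.3f} "
          f"monotonic={score(sample, MONOTONIC_WEIGHTS):.3f}")
```

The padded file slips under a 0.5 threshold for the baseline scorer, but its monotonic score is unchanged: with no negative weights available, the attacker’s only way to lower that score is to remove malicious features.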

Given how significantly they harden defenses, monotonic models are now standard components of machine learning protections in Microsoft Defender ATP’s Antivirus. One of our monotonic models uniquely blocks malware on an average of 200,000 distinct devices every month. We now have three different monotonic classifiers deployed, protecting against different attack scenarios.

Monotonic models are just the latest enhancement to Microsoft Defender ATP’s Antivirus. We continue to evolve machine learning-based protections to be more resilient to adversarial attacks. More effective protections against malware and other threats on endpoints increase defense across Microsoft Threat Protection as a whole. By unifying and enabling signal-sharing across Microsoft’s security services, Microsoft Threat Protection secures identities, endpoints, email and data, apps, and infrastructure.

Geoff McDonald (@glmcdona), Microsoft Defender ATP Research team

with Taylor Spangler, Windows Data Science team

Talk to us

Questions, concerns, or insights on this story? Join discussions at the Microsoft Defender ATP community.

Follow us on Twitter @MsftSecIntel.
