Norwegian Agency Dings Facebook, Google For “Unethical” Privacy Tactics

While GDPR is forcing large data-crunching service providers to be transparent about data collection and usage, some are still using a number of tactics to nudge end users away from data privacy.

That’s according to the Norwegian Consumer Council, which said in an in-depth report released Wednesday that providers like Facebook and Google are embedding “dark patterns,” or exploitative design choices, into their interfaces, all in an effort to prompt users to share as much data as possible.

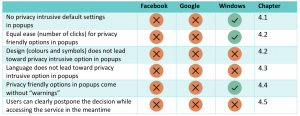

“The combination of privacy-intrusive defaults and the use of dark patterns nudge users of Facebook and Google, and to a lesser degree Windows 10, toward the least privacy friendly options, to a degree that we consider unethical,” said the agency, a Norwegian government agency and consumer protection organization, in the report.

“We question whether this is in accordance with the principles of data protection by default and data protection by design, and if consent given under these circumstances can be said to be explicit, informed and freely given,” the agency continued.

Under the GDPR, people in the E.U. have the right to know what is being done with their data and the right to access it. The regulation requires any company doing business in the E.U. that processes people’s personal data to obtain users’ consent, or to rely on another lawful basis, for each way it uses that data.

While Facebook and Google have complied with GDPR requirements to notify end users about how their data is used and under what conditions, they are also taking steps to influence users to share more data, without breaking the rules.

The companies are using what the report calls “dark patterns”: design techniques that steer users toward choices they may not consciously make, choices that benefit the service provider but may not be in the users’ interests.

“This can be done in various ways, such as by obscuring the full price of a product, using confusing language, or by switching the placement of certain functions contrary to user expectations,” said the agency.

Facebook did not respond to a request for comment from Threatpost.

“We’ve evolved our data controls over many years to ensure people can easily understand, and use, the array of tools available to them,” a Google spokesperson told Threatpost via email. “Feedback from both the research community and our users, along with extensive UI testing, helps us reflect users’ privacy preferences. For example, in the last month alone, we’ve made further improvements to our Ad Settings and Google Account information and controls.”

Sneaky Tactics

In its Wednesday report, the agency said it examined Facebook’s and Google’s services in April and May 2018. The report describes how, in the agency’s view, both companies mislead consumers about data privacy through their default settings, wording and images, and by making privacy-friendly options harder to choose.

One big issue was default settings. GDPR mandates that default settings allow no more collection or use of personal data than is required to provide the service, but the agency said it does not believe the processes Facebook and Google set up for reviewing data settings under GDPR were private by default.

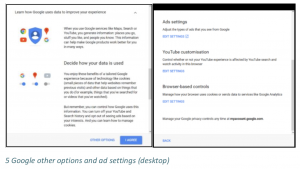

On both services, users must actively visit a settings page or privacy dashboard to turn off ads based on data from third parties. Both also have default settings pre-selected to “the least privacy friendly options,” the report said.

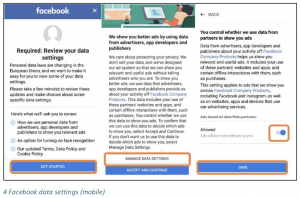

Facebook and Google both made privacy-friendly options more cumbersome for end users to select. For instance, when asking Facebook users to accept facial recognition, the company displayed a bright blue button inviting users to “Agree and Continue,” alongside a dull grey button for the alternative and seemingly more time-consuming option, “Manage Data Settings.”

The services also guided users toward certain choices by emphasizing the benefits of one option while glossing over its potential drawbacks.

“All of the services nudge users toward accepting data collection through a combination of positioning and visual cues. However, Facebook and Google go further by requiring a significantly larger amount of steps in order to limit data collection,” the agency concluded.

Finally, both services took a “take it or leave it” approach to their GDPR data-privacy policies, the agency said.

On Facebook’s GDPR pop-up, for instance, users who click the alternative to “I Accept” are led to another screen, which says they can either go back and accept the terms or delete their account.

Meanwhile, users who tried to opt out of personalized advertising in Google’s GDPR pop-up were told that they would lose the ability to block or mute some ads, the report said.

Facebook and Google have faced tighter scrutiny over data privacy, particularly since GDPR took effect in May and since Facebook’s Cambridge Analytica data-scraping scandal came to light in March.

Jeannie Warner, security manager at WhiteHat Security, agreed that large service providers like Facebook are trying to walk a fine line between complying with GDPR and tracking, and taking advantage of, users’ statistics and behaviors.

“GDPR is important because it grants the right for citizens to make sure [their data] can be taken out of companies’ systems… and it’s entirely correct that [Facebook and Google] have created a system where it’s hard to do that,” she told Threatpost. “Developers aren’t privacy lawyers. They want users to keep sharing information.”

All images included inside the article are courtesy of the Norwegian Consumer Council.
