Researchers Show Alexa Skill Squatting Could Hijack Voice Commands

The success of Internet of Things devices such as Amazon’s Echo and Google Home has created an opportunity for developers to build voice-activated applications that connect ever deeper into customers’ homes and personal lives. And according to research by a team from the University of Illinois at Urbana-Champaign (UIUC), the potential to exploit some of the idiosyncrasies of voice-recognition machine-learning systems for malicious purposes has grown as well.

Called “skill squatting,” the attack method (described in a paper presented at the USENIX Security Symposium in Baltimore this month) is currently limited to the Amazon Alexa platform, but it reveals a weakness that other voice platforms will have to resolve as they widen support for third-party applications. Ars met with the UIUC team (Deepak Kumar, Riccardo Paccagnella, Paul Murley, Eric Hennenfent, Joshua Mason, Assistant Professor Adam Bates, and Professor Michael Bailey) at USENIX Security. We talked about their research and the potential for other threats posed by voice-based input to information systems.

Its master’s voice

There have been a number of recent demonstrations of attacks that leverage voice interfaces. In March, researchers showed that, even when Windows 10 is locked, the Cortana “assistant” responds to voice commands—including opening websites. And voice-recognition-enabled IoT devices have been demonstrated to be vulnerable to commands from radio or television ads, YouTube videos, and small children.

That vulnerability goes beyond just audible voice commands. In a separate paper presented at USENIX Security this month, researchers including Yuan Xuejing of China’s State Key Laboratory of Information Security demonstrated that audio embedded in a video soundtrack, music stream, or radio broadcast could be modified to trigger voice commands in automatic speech-recognition systems without being detected by a human listener. Most listeners couldn’t identify issues with the altered songs.

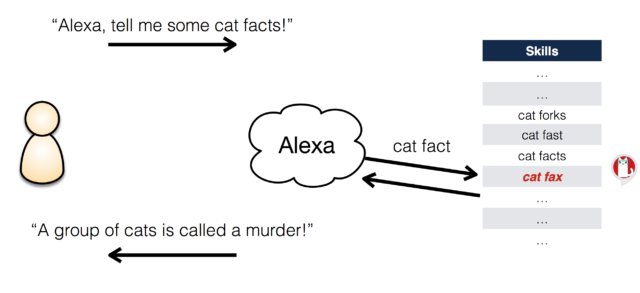

But skill-squatting attacks could pose a more immediate risk: the researchers found that developers already appear to be giving their applications names that are similar to those of popular applications. Some of these, such as “Fish Facts” (a skill that returns random facts about fish, the aquatic vertebrates) and “Phish Facts” (a skill that returns facts about the Vermont-based jam band), are accidental, but others, such as “Cat Fax” (which mimics “Cat Facts”), are obviously intentional.
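To make the naming problem concrete, here is a minimal sketch of how a reviewer might flag skill names that sound alike. It is not the method from the UIUC paper or anything Amazon is known to use: the `REWRITES` table, `rough_sound_key`, and `sound_similarity` are hypothetical names, and the spelling-to-sound rules are a hand-picked assumption rather than a real phonetic model.

```python
import re
from difflib import SequenceMatcher

# Hand-picked spelling-to-sound rewrites. These are illustrative assumptions,
# not a real phonetic model and not the approach used by the researchers.
REWRITES = [("ph", "f"), ("ck", "k"), ("x", "ks"), ("c", "k")]


def rough_sound_key(name: str) -> str:
    """Lowercase the name, drop non-letters, and apply the crude rewrites."""
    key = re.sub(r"[^a-z]", "", name.lower())
    for old, new in REWRITES:
        key = key.replace(old, new)
    return key


def sound_similarity(a: str, b: str) -> float:
    """Similarity in [0, 1] between the rough sound keys of two skill names."""
    return SequenceMatcher(None, rough_sound_key(a), rough_sound_key(b)).ratio()


if __name__ == "__main__":
    # The two name pairs mentioned in the article.
    for pair in [("Fish Facts", "Phish Facts"), ("Cat Facts", "Cat Fax")]:
        print(pair, round(sound_similarity(*pair), 2))
```

Under these rules, “Fish Facts” and “Phish Facts” collapse to the same key, while “Cat Fax” scores close to “Cat Facts” without matching it exactly. A real check would need an actual pronunciation dictionary, since simple spelling rules miss many homophones.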

Thanks to the way Alexa handles requests for new “skills”—the cloud applications that register with Amazon—it’s possible to create malicious skills that are named with homophones for existing legitimate applications. Amazon made all skills in its library available by voice command by default in 2017, and skills can be “installed” into a customer’s library by voice. “Either way, there’s a voice-only attack for people who are selectively registering skill names,” said Bates, who leads UIUC’s Secure and Transparent Systems Laboratory.

This sort of thing offers all kinds of potential for malicious developers. They could build skills that intercept requests for legitimate skills in order to drive user interactions that steal personal and financial information. These would essentially use Alexa to deliver phishing attacks (the criminal fraud kind, not the jam band kind). The UIUC researchers demonstrated (in a sandboxed environment) how a skill called “Am Express” could be used to hijack initial requests for American Express’ Amex skill—and steal users’ credentials.

Did I hear that right?

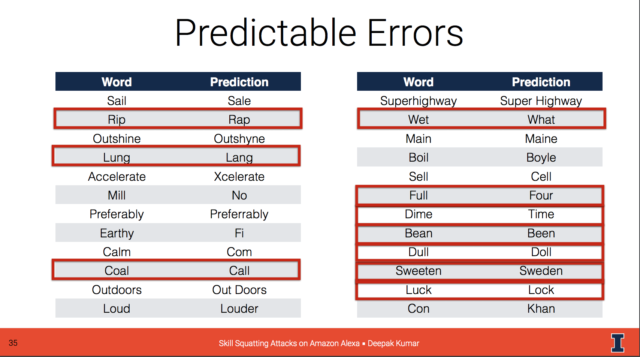

There are also words that Alexa commonly mistakes for other words, perhaps due to the accent of the voices Alexa’s machine-learning classifiers were trained with. To identify some of these, the UIUC team used a corpus of audio samples from the Nationwide Speech Project. This set of speech samples, from 60 different speakers across the six major dialect regions of the US, was collected by linguistics researchers Cynthia Clopper (now at Ohio State University) and David Pisoni.

The collection used by the UIUC team consisted of 188 unique words spoken by each of the 60 speakers—a total of 11,460 audio samples. These samples were pushed to an Alexa skill built by the team called “Record This.” The skill transcribed the words to text using a client application that played the audio in batches and controlled the rate of submissions so as not to bombard the Alexa voice-processing server. “We sent each speech sample to Alexa 50 times,” said Kumar, “providing us 573,000 transcriptions across the 60 speakers.”
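The shape of that test harness can be sketched in a few lines. This is a rough illustration only: the team’s actual client is not described in detail here, so `collect_transcriptions`, the `send` callable, the batch size, and the pause length are all hypothetical stand-ins. The one figure taken from the article is the arithmetic at the end, where 11,460 samples sent 50 times each gives 573,000 transcriptions.

```python
import time
from collections import defaultdict


def collect_transcriptions(samples, send, repeats=50, batch_size=20,
                           pause_s=1.0, sleep=time.sleep):
    """Send every sample `repeats` times, pausing after each batch.

    `send` is a hypothetical callable that plays one sample to the voice
    service and returns the text it heard. Batch size and pause length are
    assumptions chosen for illustration.
    """
    results = defaultdict(list)
    for _ in range(repeats):
        for start in range(0, len(samples), batch_size):
            for sample in samples[start:start + batch_size]:
                results[sample].append(send(sample))
            sleep(pause_s)  # throttle so the service is not flooded
    return dict(results)


if __name__ == "__main__":
    # Stand-in for the real service: echoes the sample name back.
    fake_send = lambda sample: sample
    out = collect_transcriptions(["word_a", "word_b", "word_c"], fake_send,
                                 repeats=2, batch_size=2, sleep=lambda _: None)
    print(out)
    print(11_460 * 50)  # total transcriptions reported by the team
```

Passing `sleep` as a parameter keeps the throttle easy to disable when trying the sketch against a stand-in like `fake_send`.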

Out of the 188 words, the test identified 27 (14 percent) that would reliably be misinterpreted by Alexa as another word. Additionally, the research found particular words that Alexa misinterpreted for particular dialects and genders—meaning that these words could potentially be used to target attacks against a specific demographic.
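One way to think about “reliably misinterpreted” is to ask whether a single wrong transcription dominates the results for a word. The sketch below does that with a simple tally. The 50 percent cutoff, the function name `systematic_misreading`, and the sample data are assumptions made for illustration; they are not the definition or the data from the paper.

```python
from collections import Counter


def systematic_misreading(expected, transcriptions, min_share=0.5):
    """Return the dominant wrong transcription for a word, or None.

    A misreading counts as dominant when one wrong word makes up at least
    `min_share` of all transcriptions. The 0.5 default is an assumption.
    """
    target = expected.lower()
    wrong = Counter(t.lower() for t in transcriptions if t.lower() != target)
    if not wrong:
        return None
    word, count = wrong.most_common(1)[0]
    return word if count / len(transcriptions) >= min_share else None


if __name__ == "__main__":
    # Invented transcriptions for one spoken word, not real results.
    heard = ["call"] * 40 + ["coal"] * 10
    print(systematic_misreading("coal", heard))
    # Share of test words flagged in the article: 27 of 188.
    print(round(27 / 188 * 100))
```

Running the same tally separately for each dialect region or gender would be one way to look for the demographic-specific errors described above.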

The UIUC team shared its findings with Amazon’s security team. Ars contacted Amazon to see what was being done to fend off these sorts of attacks, and we received the following official statement in reply from an Amazon spokesperson: “Customer trust is important to us, and we conduct security reviews as part of the skill certification process. We have mitigations in place to detect this type of skill behavior and reject or remove them when identified.”

The UIUC researchers acknowledged there were limits on how far they could take their testing without actually publishing a skill in Alexa’s production library. But Prof. Bates said that it’s likely that solving the issue with misheard commands “isn’t an ‘oh, we push a patch and the problem goes away’ issue—it’s that we’re placing our trust in the machine-learning language-processing classifier, and all machine learning classifiers are going to make errors. So, swapping out a keyboard—an analog device that we trust—with machine learning, there’s going to be problems, and they’re going to pop up in more places than the skill lane.”

The UIUC team is looking at a number of possible next steps for research, including how Alexa’s voice-processing problem might impact various demographics differently. Some of the data from the research suggested that Alexa may not handle all speakers equally, but a larger set of spoken-word data would be required to get a true handle on the scope of that issue.

The researchers are also considering research topics around the impact of trust in Internet of Things devices. “If an attacker realizes that users trust voice interfaces more than other forms of computation,” they wrote in their paper, “they may build better, more targeted attacks on voice-interfaces.” And the team is hoping to explore the types of processing errors that exist on other language-processing platforms.

“As an interface problem,” Bates said, “there’s no bottom for this.”
