San Francisco Rolls Back Its Plan for Killer Robots

On Nov. 29, the San Francisco Board of Supervisors voted to allow the San Francisco Police Department (SFPD) to use armed robots for lethal force, a month after public outcry caused the police in nearby Oakland to rescind a similar request. A week later, the board voted again, this time to explicitly deny permission for deadly force and send the original bill back to committee. Many questions about that original bill remain unanswered, but one stands out: If we allow civilian agencies to arm robots, how do we guard against misuse, not only by police themselves but also by unauthorized users?

Security researchers Cesar Cerrudo and Lucas Apa tackled those questions as IOActive consultants back in 2017. The issues are still prevalent today, according to Cerrudo, now an independent cybersecurity researcher.

“As any piece of technology, robots could be hacked; it could be easy or extremely difficult, but possibilities are there,” he tells Dark Reading. “In order to reduce hacking possibilities, robot technology has to be built with security in mind from the very beginning and then all the robot technology properly audited to identify all potential vulnerabilities and to eliminate them.”

The categories of vulnerabilities Cerrudo and Apa discovered included insecure communications, authentication issues, missing authorization, weak cryptography, privacy concerns, insecure default configurations, and easily probed open source frameworks.

While the researchers didn’t test military or police robots, such machines are likely to be similarly vulnerable. “These robots are some of the most dangerous when hacked, since they are often used to manipulate dangerous devices and materials, such as guns and explosives,” they wrote in the paper.

One example of the scale of the danger is a 2007 failure in an automatic anti-aircraft cannon in South Africa that killed nine soldiers and wounded a dozen more in a span of 0.8 seconds. Now imagine what would happen if someone successfully turned an armed police robot back toward the officers or toward the public.

The possibility that robots can be hacked is not so far-fetched. Last year hacktivists gained access to a network of 150,000 surveillance cameras, including those inside of prisons, by obtaining the super admin account information. Hackers have seized control of a Jeep Cherokee driving down a highway and have taken control of other cars via a key fob hack that has gone mainstream. Even entire buildings have been hacked.

Taking Back Permission to Kill

“I simply do not feel arming robots and giving them license to kill will make us safer,” said Supervisor Gordon Mar on Dec. 6 before voting to send the original bill back to committee.

On Nov. 29, the board had voted 8-3 to approve the bill allowing deadly force; on Dec. 6, the vote was 8-3 to rescind it. “It was a rare step,” the San Francisco Chronicle reported. “The board’s second votes on local laws are typically formalities that don’t change anything.”

What does change things in San Francisco is public protest. “We’ve all heard from a lot of constituents … on the idea that we as a city will have robots deliver deadly force,” said Supervisor Dean Preston at the Dec. 6 meeting.

Three of the supervisors — Shamann Walton (also board president), Hillary Ronen, and Preston — attended one such protest at city hall on Monday, organized by the Quakers, where a crowd held placards reading “no killer robots.”

“We will fight this legislatively at the board, we will fight this in the streets and on public opinion, and if necessary, we will fight this at the ballot,” Preston said. That last threat might have been the most persuasive; city voters are not afraid to govern directly through ballot initiatives.

The SFPD has about a dozen robots purchased between 2010 and 2017, the department said in a statement.

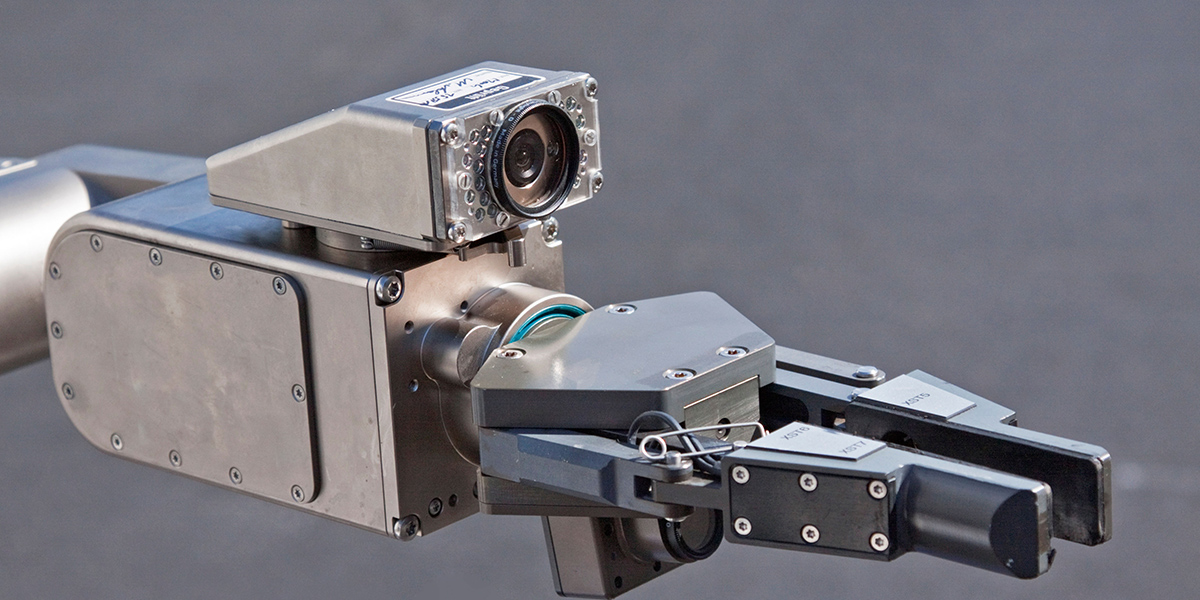

“The robots are remotely controlled and operated by SFPD officers who have undergone specialized training,” according to the statement. “Our robots are primarily used in EOD/bomb situations, hazardous materials incidents, and other incidents where officers may need to keep a safe distance before rendering a scene secure.”

The SFPD statement continued: “In extreme circumstances, robots could be used to deliver an explosive charge to breach a structure containing a violent or armed subject. The charge would be used to incapacitate or disorient a violent, armed, or dangerous subject who presents a risk of loss of life,” although such use “could potentially cause injury or be lethal.”

While the Dec. 6 vote was a setback for the SFPD, the San Francisco Standard pointed out that the issue isn’t necessarily finalized. The rules committee might be able to sit on the bill long enough that public attention fades, or it could come up with language that wins back the support of a majority of supervisors. But for now, murderbots are explicitly forbidden, and the matter seems settled for the moment.

Not the First Robo-Cops

Unmanned aerial vehicles (UAVs) have been part of military tactics for half a century, and there are several examples of police departments using remotely operated drones. In 2015, North Dakota legalized arming drones with less-than-lethal weapons like Tasers. Of course, Tasers can be lethal, and drones are notoriously vulnerable to hacking.

Another prominent police use of unmanned robotics was the NYPD’s failed Digidog experiment in 2020-2021, which sent remote-controlled Boston Dynamics robots into dangerous scenes, such as standoffs and hostage situations, to minimize risk to personnel. Public reaction to the spidery, fast-moving, stair-climbing robots was so negative, however, that the department ended the contract early.

Proponents of unmanned mobile equipment usually base the technology’s mission on saving lives, whether of hostages or police. Indeed, the SFPD’s statement asserted, “Robots equipped in this manner would only be used to save or prevent further loss of innocent lives.” We have already seen how that plays out in real life.

Police Robots Have Already Killed

American police have already used a robot to deliver lethal force. Dallas PD sent a robot rigged with explosives to kill Micah Johnson back in 2016, ending a standoff after Johnson shot 12 Dallas officers, killing five. A grand jury cleared the police of any wrongdoing in that incident, where the police used the ruse of sending the shooter a phone to get the robot close enough. Some of the SFPD’s dozen existing robots are “wheeled bomb-disposal robots with extending arms similar to the one used by Dallas officers in 2016,” according to the Washington Post.

After that 2016 Dallas use of force, UC Davis law professor Elizabeth E. Joh wrote in the New York Times: “And a robot is unlike a gun in that a gun may misfire, but it can’t be hacked. The market for police robots is emerging, but we as a society — and that includes the police — should be wary of any armed police robot that is vulnerable to takeover by third parties. Experience with the security of electronic devices doesn’t inspire confidence: If third parties can hack cars or toy drones, they can certainly hack police robots.”

Cerrudo’s assessment aligns with that warning.

“If I were to deploy these robots, I would make sure that they were built with security in mind and that they have been properly security-audited by experts,” he says. “Failing to do this is exposing the robots to possible cyberattacks that could turn a robot into a dangerous weapon.”
