New machine learning model sifts through the good to unearth the bad in evasive malware

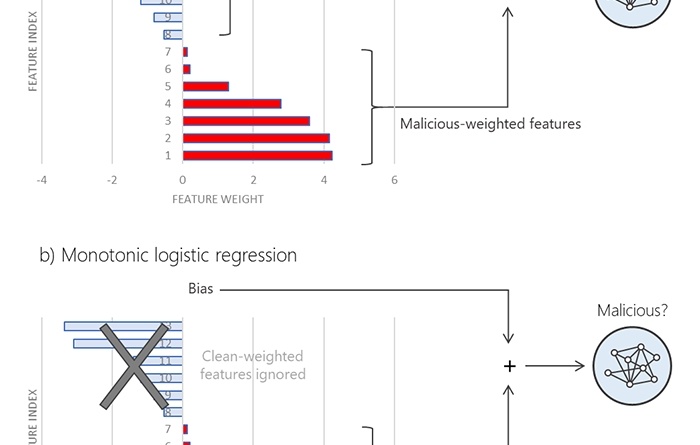

Most machine learning models are trained on a mix of malicious and clean features. Attackers routinely try to throw these models off balance by stuffing clean features into malware. Monotonic models resist this kind of adversarial attack because they are trained differently: they look only for malicious features, so an added feature can never lower a file's malicious score. The magic is this: attackers can’t evade a monotonic model by adding clean features. To evade a monotonic model, an attacker would have to remove malicious features.
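To make the idea concrete, here is a minimal sketch of the difference, not the model described in the post. It assumes a toy linear scorer over sets of feature names; every feature name, weight, and the threshold are hypothetical values chosen only for illustration. The conventional scorer lets clean features subtract from the score, while the monotonic scorer uses only non-negative weights on malicious features and ignores everything else.

```python
# Toy illustration only: feature names, weights, and threshold are hypothetical.
MALICIOUS_WEIGHTS = {
    "writes_to_startup_folder": 1.5,
    "disables_security_tool": 2.5,
    "packed_section": 1.0,
}
CLEAN_WEIGHTS = {
    "signed_by_known_vendor": -1.0,
    "has_version_info": -1.0,
    "common_ui_strings": -1.0,
}
THRESHOLD = 2.0


def conventional_score(features):
    """Score with both malicious (positive) and clean (negative) weights."""
    weights = {**MALICIOUS_WEIGHTS, **CLEAN_WEIGHTS}
    return sum(weights.get(name, 0.0) for name in set(features))


def monotonic_score(features):
    """Score with non-negative weights only; unknown or clean features add 0."""
    return sum(MALICIOUS_WEIGHTS.get(name, 0.0) for name in set(features))


def is_malicious(score):
    return score >= THRESHOLD


malware = {"disables_security_tool", "packed_section"}
# Attacker pads the sample with clean-looking features.
padded = malware | set(CLEAN_WEIGHTS)
# Attacker removes a malicious feature instead.
stripped = malware - {"disables_security_tool"}

for label, sample in [("original", malware), ("padded", padded), ("stripped", stripped)]:
    print(
        label,
        "conventional:", is_malicious(conventional_score(sample)),
        "monotonic:", is_malicious(monotonic_score(sample)),
    )
```

In this sketch, padding pushes the conventional score below the threshold, but it leaves the monotonic score unchanged. Only the stripped sample, which gives up a malicious feature, falls under the monotonic threshold.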

The post New machine learning model sifts through the good to unearth the bad in evasive malware appeared first on Microsoft Security.