Ultrasonic Waves Can Make Siri Share Your Secrets

Researchers at Washington University in St. Louis have discovered a new way to attack mobile devices with inaudible voice assistant commands, one that works from up to 30 feet away. While the attack requires such a specific environment that we’re unlikely to see it used much in the wild, it nevertheless represents a previously unknown vulnerability that affects virtually all mobile devices, including all iPhones running Siri and a plethora of Android devices running Google Assistant.

The research team presented its full report on the vulnerability at the Network and Distributed System Security Symposium on February 24, and has since published a summary on the university website. The gist of the main finding is that these voice assistant programs listen across a frequency range far wider than the human voice can produce, and thus can be fed ultrasonic waves that will be interpreted as voice commands while remaining completely inaudible to the human ear.
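To make the "wider frequency range" point concrete, here is a minimal sketch of how one might measure how much of a recorded signal's energy sits above the range of human hearing. It is not the researchers' method or any voice assistant's actual code: the function names are hypothetical, the 20 kHz cutoff is the commonly cited upper limit of human hearing (an assumption, not a figure from the study), and the naive transform is only practical for short test buffers.

```python
import cmath
import math


def ultrasonic_energy_fraction(samples, sample_rate, cutoff_hz=20_000.0):
    """Return the fraction of spectral energy at or above cutoff_hz.

    cutoff_hz defaults to 20 kHz, an assumed upper limit of human hearing.
    sample_rate must exceed 2 * cutoff_hz for those frequencies to be visible.
    """
    n = len(samples)
    if n == 0:
        return 0.0
    total = 0.0
    high = 0.0
    # Naive discrete Fourier transform over the non-negative frequency bins.
    for k in range(n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n)
            for i, x in enumerate(samples)
        )
        power = abs(coeff) ** 2
        total += power
        # Bin k corresponds to a frequency of k * sample_rate / n hertz.
        if k * sample_rate / n >= cutoff_hz:
            high += power
    return high / total if total > 0 else 0.0


def tone(freq_hz, sample_rate, n):
    """Generate n samples of a pure sine tone (hypothetical test helper)."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


if __name__ == "__main__":
    rate = 96_000  # assumed sample rate, high enough to capture 30 kHz
    print(ultrasonic_energy_fraction(tone(1_000, rate, 192), rate))
    print(ultrasonic_energy_fraction(tone(30_000, rate, 192), rate))
```

An audible 1 kHz tone should score close to zero and a 30 kHz tone close to one. A check along these lines could in principle flag input that no human voice would produce, though whether that would stop this particular attack is not something the article addresses.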

As part of their study, the researchers set up a variety of scenarios in which this attack method could be used to steal information from the target device. All versions of the attack shared a common premise and required the same laundry list of specialized tools: a piece of software that can produce the right waveforms, an ultrasonic generator to output the signal, a piezoelectric transducer (a device that turns an electrical signal into physical vibrations), and a hidden microphone to listen for the voice assistant’s response.

The most obviously compromising version of the attack involves using these inaudible queries to ask the voice assistant to set the phone’s volume to a very low level, then read aloud the contents of a text message containing a two-factor authentication code. In theory, this could be done in a way that leaves the phone’s owner completely unaware of the attack, as the voice assistant reads the text at a volume that gets lost in the background noise of an office or public space but can nonetheless be picked up by the hidden microphone.

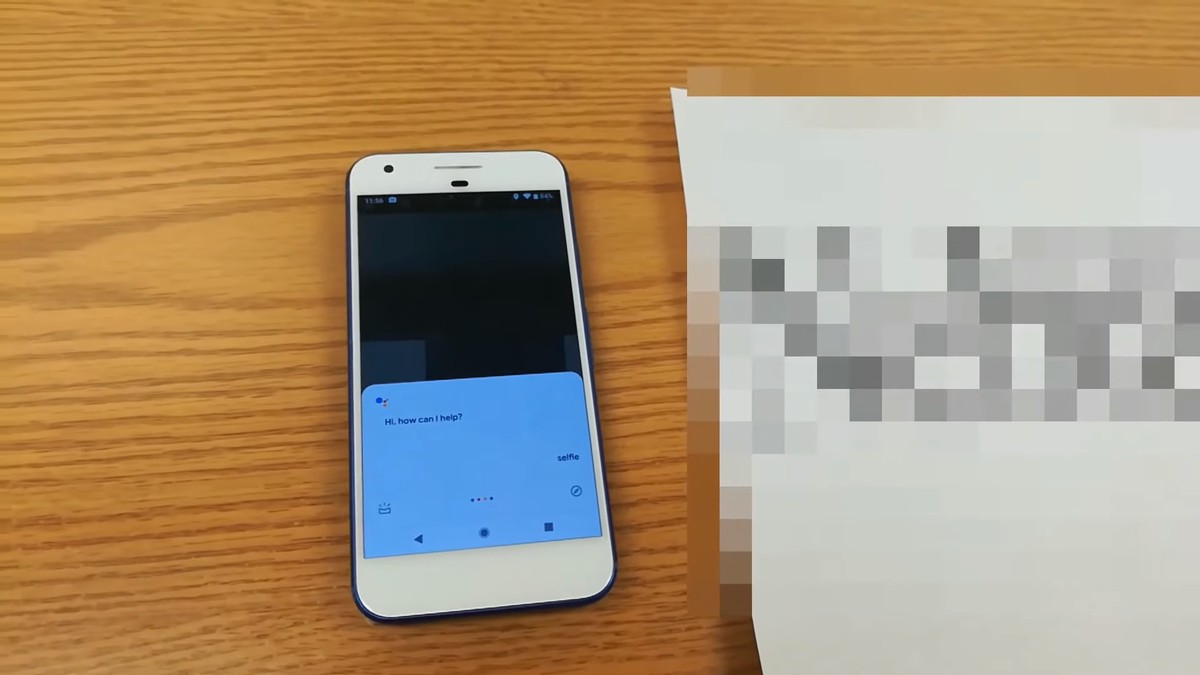

To perform the attack at an inconspicuous distance, the researchers conducted the ultrasonic signal through a hard surface on which the target device was placed. They found that the process worked through up to 30 feet of metal, glass or wood, but softer materials such as a tablecloth foiled the attack by not conducting the signal with sufficient fidelity.

The idea of an attacker being able to sit down 30 feet away from you and read all your text messages sounds quite terrifying, but it’s important to keep in mind the improbability of becoming the victim of an attack like this in the real world. As mentioned above, the attack requires specialized equipment, some of it odd-looking and cumbersome, which makes it hard to deploy in a public space without attracting suspicion. Furthermore, it requires a very particular physical arrangement in which the target device is out of the attacker’s line of sight while still being coupled to a hard surface on which the attacker can deploy the required gadgetry.

“I don’t think we will see such an attack a lot in common places, but probably used for a more targeted attack,” says assistant professor Ning Zhang, who led the research team. He also warned that while the hardware used in the demonstration is unwieldy, there are alternatives: “The signal generator can be expensive and bulky, but you can design your own hardware to generate certain signals … depending on how DIY you want to do. A cell phone will also do, if bulkiness is the main problem.”

Of course, a particularly paranoid user can foil this method of attack by simply keeping their phone in their pocket instead of leaving it on the table next to them. As with many of these exciting-sounding methods of cyberattack, this is technically possible to pull off in the wild, but unlikely to appear outside the world of serious espionage and/or spy movies any time soon.
